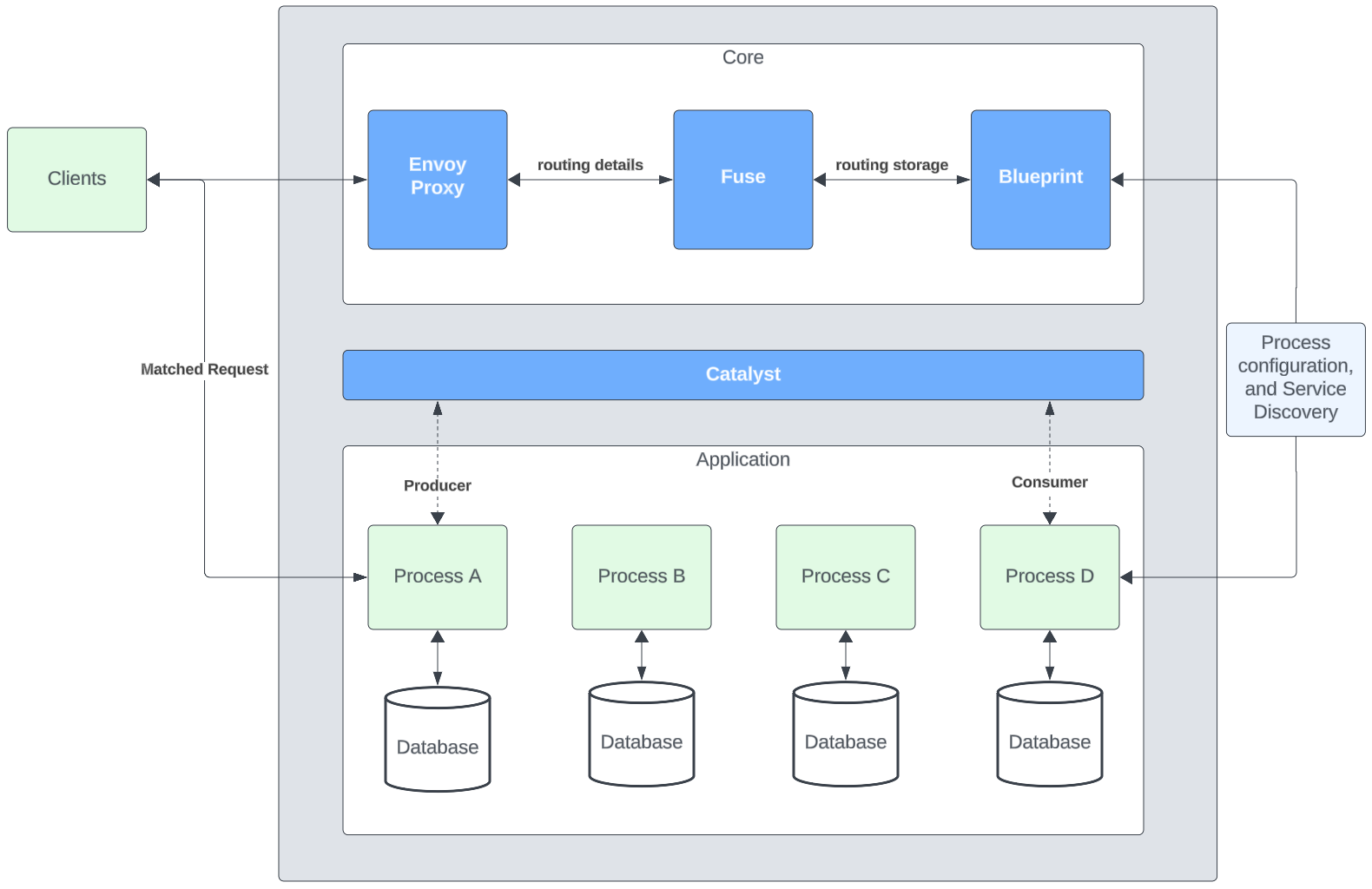

Core Services

An overview of the core components of a Draft cluster

A Draft cluster is made up of a few core services that are used to store your system configuration, handle service discovery, manage routing, and stream events.

- Blueprint - A distributed key/value store and service registry for handling service discovery.

- Fuse - A control plane that manages routing configuration for running services.

- Catalyst - An event streaming interface for real-time message production and consumption.

Additionally, Envoy is used as the ingress controller to the system. Envoy is controlled by routing rules configured through Fuse.

See below for a simple visual of a Draft cluster.

Blueprint

Blueprint is a distributed key/value store and service registry. Blueprint is built to handle heavy write workloads while also maintaining data consistency between all nodes in the cluster.

Blueprint uses the Raft Consensus Algorithm to establish a leader of the cluster and to maintain quorum between the cluster nodes so that it can gracefully handle node failure and network partitions. If the leader is lost, a new election begins and the remaining nodes agree on another leader. For simplicity, only one Blueprint node (the leader) can accept a write request and write it to the LSM tree. If a follower node receives a write request, it automatically forwards the request to the leader and informs the client of the leader's connection details.
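The forwarding behavior described above can be illustrated with a minimal sketch. This is not Blueprint's implementation: the `Node` and `WriteResult` types, the addresses, and the plain `dict` standing in for the LSM tree are all assumptions made for illustration, and replication to followers and leader election are left out entirely.

```python
from dataclasses import dataclass, field


@dataclass
class WriteResult:
    ok: bool
    leader_address: str  # returned so the client can contact the leader directly


@dataclass
class Node:
    address: str                      # hypothetical address format
    is_leader: bool = False
    leader: "Node | None" = None      # hypothetical handle to the current leader
    store: dict = field(default_factory=dict)  # stand-in for the LSM tree

    def write(self, key: str, value: str) -> WriteResult:
        if self.is_leader:
            # Only the leader applies the write to its store.
            self.store[key] = value
            return WriteResult(ok=True, leader_address=self.address)
        if self.leader is None:
            # No leader is known, for example while an election is running.
            return WriteResult(ok=False, leader_address="")
        # A follower forwards the request and relays the leader's details.
        return self.leader.write(key, value)


leader = Node(address="10.0.0.1:2221", is_leader=True)
follower = Node(address="10.0.0.2:2221", leader=leader)
result = follower.write("service/a", "ready")
```

In this sketch the client that wrote to the follower receives the leader's address in `result.leader_address`, so later writes could skip the extra hop.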

Blueprint also uses a combination of an LSM tree and a finite state machine (FSM) to efficiently store data on the file system and guarantee that each object is written correctly to all nodes of the cluster. This design gives any dependent system an efficient and reliable key/value storage layer.
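To make the pairing of an LSM tree and an FSM more concrete, here is a toy sketch under stated assumptions. `TinyLSM` and `StoreFSM` are hypothetical names, segments are kept in memory rather than on disk, and compaction is omitted. It only shows the general idea: committed entries are applied in log order, writes land in a memtable, and full memtables are flushed to sorted immutable segments.

```python
import bisect


class TinyLSM:
    """Toy LSM tree: an in-memory memtable plus immutable sorted segments."""

    def __init__(self, memtable_limit=2):
        self.memtable_limit = memtable_limit
        self.memtable = {}
        self.segments = []  # list of sorted (key, value) lists, oldest first

    def put(self, key, value):
        self.memtable[key] = value
        if len(self.memtable) >= self.memtable_limit:
            self.flush()

    def flush(self):
        # Persist the memtable as a sorted, immutable segment.
        self.segments.append(sorted(self.memtable.items()))
        self.memtable = {}

    def get(self, key):
        if key in self.memtable:
            return self.memtable[key]
        # The newest segment holds the latest value, so search from the end.
        for segment in reversed(self.segments):
            keys = [k for k, _ in segment]
            i = bisect.bisect_left(keys, key)
            if i < len(keys) and keys[i] == key:
                return segment[i][1]
        return None


class StoreFSM:
    """Applies committed log entries to the local store, strictly in order."""

    def __init__(self):
        self.store = TinyLSM()
        self.last_applied = 0

    def apply(self, index, key, value):
        if index != self.last_applied + 1:
            raise ValueError("log entries must be applied in order")
        self.store.put(key, value)
        self.last_applied = index


fsm = StoreFSM()
fsm.apply(1, "a", "1")
fsm.apply(2, "b", "2")  # memtable reaches its limit and is flushed
fsm.apply(3, "a", "3")  # newer value for "a" lives in the memtable
```

Because every node applies the same entries in the same order, each node's store ends up with the same contents, which is the property the FSM is there to provide.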

Blueprint uses its own internal key/value store to act as the service registry and to track the health and wellness checks of registered Draft processes. You can think of a service registry as the system journal of a distributed system (consider systemd as an example of this). Currently, Blueprint is not responsible for scheduling additional resources in case of failure (though we are considering this for the future), so its main responsibility is to keep track of the state and details of registered Draft processes. Two details in particular are kept up to date:

- Process Running State - A value retained to determine if a process is ready for certain kinds of traffic.

- Process Health State - The healthiness state of the process.
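As a rough illustration, a registry entry holding these two details might look like the sketch below. The enum values, the `ProcessRecord` type, and the `accepts_traffic` rule are assumptions for this example and are not taken from Blueprint itself.

```python
from dataclasses import dataclass
from enum import Enum


class RunningState(Enum):
    # Hypothetical values; the real set of states may differ.
    STARTING = "starting"
    RUNNING = "running"
    DRAINING = "draining"


class HealthState(Enum):
    # Hypothetical values.
    HEALTHY = "healthy"
    UNHEALTHY = "unhealthy"


@dataclass
class ProcessRecord:
    process_id: str
    running_state: RunningState
    health_state: HealthState

    def accepts_traffic(self) -> bool:
        # Assumed rule: only running, healthy processes receive traffic.
        return (
            self.running_state is RunningState.RUNNING
            and self.health_state is HealthState.HEALTHY
        )


record = ProcessRecord("proc-1", RunningState.STARTING, HealthState.HEALTHY)
ready_at_boot = record.accepts_traffic()
record.running_state = RunningState.RUNNING
ready_after_start = record.accepts_traffic()
```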

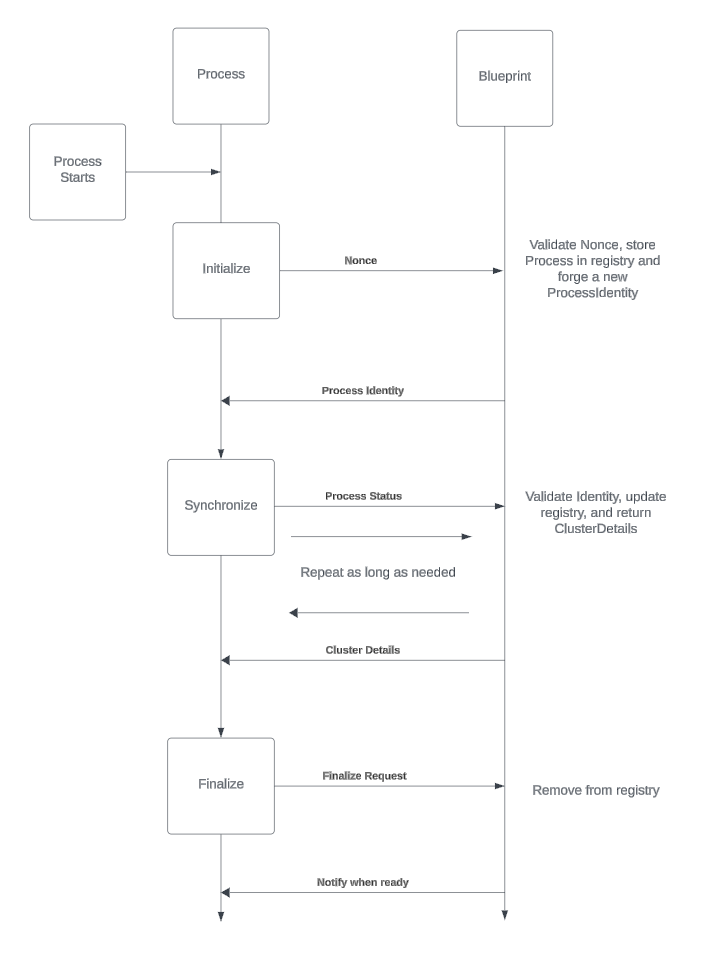

Process Registration

A Draft process must be provided with an entrypoint into the cluster at boot. This entrypoint is the address of a Blueprint node within the cluster. At boot, the process will begin the registration flow which is outlined below:

- A new Process sends a nonce to Blueprint

- Blueprint will validate the nonce, create a Process Identity and add the process to its system registry

- The registering process will open a bi-directional RPC stream to the registry, pushing its Running state and Health state to the registry at a background polling rate

- Blueprint will update its registry with any new details pushed over the above stream from the process and ack each message with the current Blueprint cluster details

- When the process is ready to leave the cluster, it will request to be removed from the registry and then close the bi-directional stream

See below for a diagram of the process registration flow:

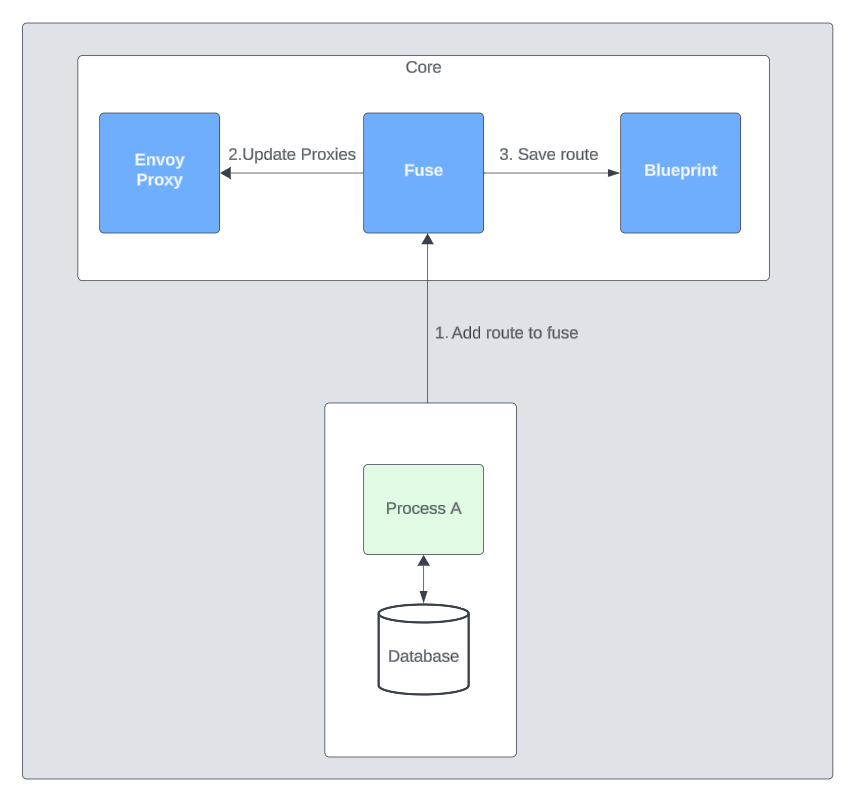
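The registration flow above can also be sketched in code. This is a simplified in-memory model, not Blueprint's RPC API: the `Registry` class and its method names are hypothetical, the bi-directional stream is reduced to plain method calls, and the way nonces are validated (a pre-shared, single-use set) is an assumption.

```python
import uuid


class Registry:
    """In-memory stand-in for Blueprint's service registry."""

    def __init__(self, valid_nonces, cluster_nodes):
        self.valid_nonces = set(valid_nonces)    # assumed pre-shared nonces
        self.cluster_nodes = list(cluster_nodes)
        self.processes = {}

    def register(self, nonce):
        # Validate the nonce, create an identity, and add it to the registry.
        if nonce not in self.valid_nonces:
            raise PermissionError("unknown nonce")
        self.valid_nonces.discard(nonce)  # assumption: a nonce is single use
        process_id = str(uuid.uuid4())
        self.processes[process_id] = {"running": None, "health": None}
        return process_id

    def report(self, process_id, running, health):
        # Store the pushed state and ack with the current cluster details.
        self.processes[process_id].update(running=running, health=health)
        return {"cluster_nodes": list(self.cluster_nodes)}

    def deregister(self, process_id):
        # Remove the process when it asks to leave the cluster.
        self.processes.pop(process_id, None)


registry = Registry(valid_nonces={"abc123"}, cluster_nodes=["10.0.0.1:2221"])
pid = registry.register("abc123")
ack = registry.report(pid, running="running", health="healthy")
state_after_report = dict(registry.processes[pid])
registry.deregister(pid)
```

In a real deployment the `report` call would be one message on the long-lived stream, repeated at the polling rate, and the ack would keep the process informed of changes to the Blueprint cluster.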

Fuse

Fuse is the control plane for a Draft cluster. When a service or process needs to expose routes to the outside world, it registers those routes with Fuse. Currently we only support Envoy as the ingress proxy; however, we are considering supporting other proxies in the future. Below is how a route is established in the control plane.

- Once a process has registered to Blueprint, the Fuse address is looked up and routing details are sent to Fuse.

- Fuse will update Envoy with the new route table.

- Optionally, Fuse will store the routing information within Blueprint.
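The steps above can be sketched as follows. This is an illustrative model only: `FakeProxy` stands in for Envoy, `ControlPlane` stands in for Fuse, the optional `store` dict stands in for Blueprint, and the route shape (a path prefix mapped to an upstream address) is an assumption. Envoy's actual dynamic configuration protocol (xDS) is not modeled here.

```python
class FakeProxy:
    """Stand-in for Envoy; records the last route table it was given."""

    def __init__(self):
        self.route_table = []

    def update(self, routes):
        self.route_table = list(routes)


class ControlPlane:
    """Stand-in for Fuse; keeps routes and pushes them to the proxy."""

    def __init__(self, proxy, store=None):
        self.proxy = proxy
        self.store = store  # optional key/value store standing in for Blueprint
        self.routes = {}

    def register_route(self, prefix, upstream):
        self.routes[prefix] = upstream
        # Push the complete route table to the proxy on every change.
        self.proxy.update(sorted(self.routes.items()))
        # Optionally persist the routing information.
        if self.store is not None:
            self.store["routes/" + prefix] = upstream


proxy = FakeProxy()
blueprint_store = {}
fuse = ControlPlane(proxy, store=blueprint_store)
fuse.register_route("/users", "10.0.0.5:8080")
fuse.register_route("/orders", "10.0.0.6:8080")
```

Pushing the whole table on each change keeps the proxy's view easy to reason about in a sketch; an incremental update scheme would be an equally valid design.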

Catalyst

Catalyst is the event interface of a Draft cluster. Catalyst can be run in two ways:

- As a standalone message bus that will handle event processing for all consumers and producers.

- As a pass-through interface for other message systems like Apache Kafka, Redpanda, and NATS.

We are in early development of this component. If you're interested in collaborating with us, please reach out.